Dr. Ganapathi Pulipaka 🇺🇸 on Twitter: "#AI Best: AMD + Nvidia Powers #HPC Perlmutter. #BigData #Analytics #DataScience #AI #MachineLearning #IoT #IIoT #Python #RStats #TensorFlow #JavaScript #ReactJS #CloudComputing #Serverless #DataScientist #Linux ...

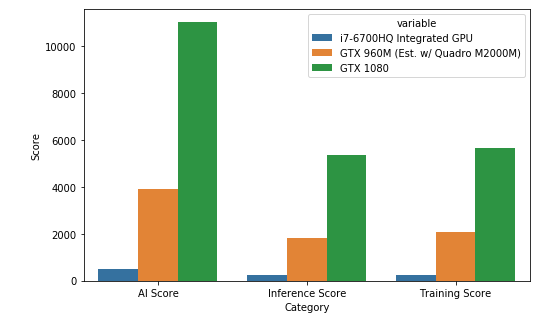

![Best GPUs for Deep Learning (Machine Learning) 2021 [GUIDE]](https://i2.wp.com/saitechincorporated.com/wp-content/uploads/2021/06/gpu-performance-in-deep-learning-chart.png?resize=580%2C339&ssl=1)

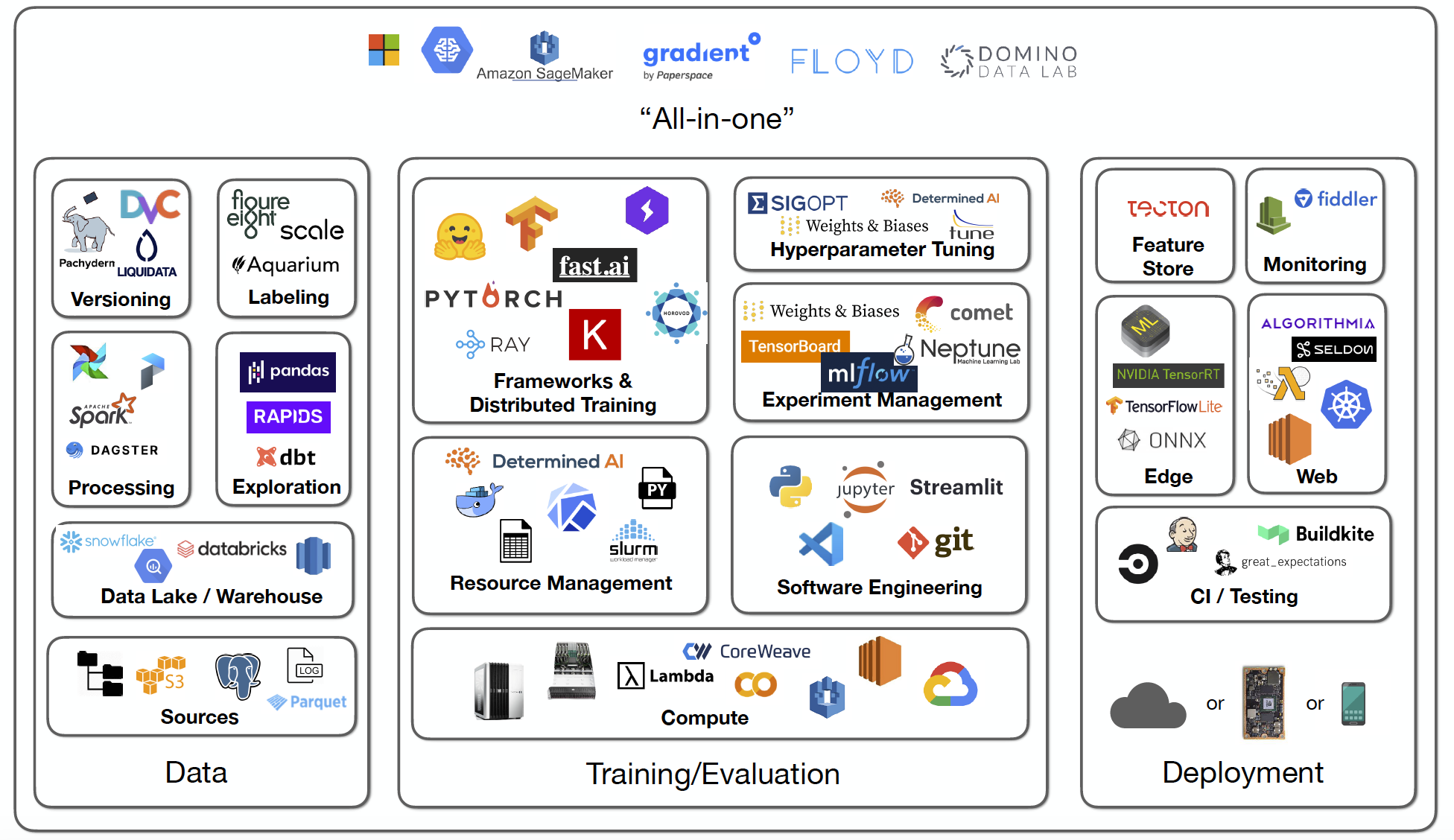

![Best GPUs for Deep Learning (Machine Learning) 2021 [GUIDE]](https://i1.wp.com/saitechincorporated.com/wp-content/uploads/2021/06/Best-gpus-for-machine-learning.png?fit=940%2C788&ssl=1)